Deep Learning in Practice : Train and deploy using fastai

Book notes: Deep Learning for Coders

Part I : Deep Learning in Practice

Chapter 1 : Your Deep Learning Journey

Machine learning glossary

Pretrained models: When using a pretrained model, the last layer of the architecture is removed, since it was trained for a different task, and replaced with one or more new layers initialized with random weights. This new part of the model is known as the head.

Fit (synonym of train): the process of updating the model's parameters so that its predictions match the target labels.

Fine-tuning: a transfer learning technique that updates the parameters of a pretrained model by training for additional epochs on a task different from the one used for pretraining.

Other applications of Deep Learning

One important application of DL is segmentation, which consists of building a model that can recognize the content of every pixel of an image (classifying each pixel as the object it belongs to). Here is an implementation using fast.ai and a subset of the CamVid dataset from the paper “Semantic Object Classes in Video: A High-Definition Ground Truth Database” by Gabriel J. Brostow et al.:

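from fastai.vision.all import *

# Download and extract the small CamVid subset
path = untar_data(URLs.CAMVID_TINY)

# Each image in images/ has a mask in labels/ with "_P" appended to its stem
dls = SegmentationDataLoaders.from_label_func(path, bs=8,
    fnames = get_image_files(path/"images"),
    label_func = lambda o: path/'labels'/f'{o.stem}_P{o.suffix}',
    codes = np.loadtxt(path/'codes.txt', dtype=str)
)

# U-Net with a pretrained resnet34 backbone
learn = unet_learner(dls, resnet34)
learn.fine_tune(8)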
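To make "classify each pixel" concrete, here is a minimal pure-Python sketch of the last step of a segmentation model: picking the highest-scoring class for every pixel. The class names, the 2x2 image, and the scores are made up for illustration, and `predict_mask` is a hypothetical helper, not part of fast.ai.

```python
# Conceptual sketch only: per-pixel classification on a tiny 2x2 "image".
# The class names and scores are invented for illustration.
codes = ["Sky", "Road", "Car"]

# scores[row][col] holds one score per class for that pixel
scores = [
    [[2.0, 0.1, 0.3], [1.5, 0.2, 0.1]],
    [[0.1, 3.0, 0.5], [0.2, 0.4, 2.2]],
]

def predict_mask(scores):
    """Return, for every pixel, the index of its highest-scoring class."""
    return [[max(range(len(px)), key=px.__getitem__) for px in row] for row in scores]

mask = predict_mask(scores)                         # class index per pixel
labels = [[codes[i] for i in row] for row in mask]  # class name per pixel
```

A real model produces these scores with a neural network; the mask has the same height and width as the input image.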

Building models from tabular data

Tabular models are widely used commercially. Let's first understand what tabular data is.

Tabular data: data stored in a table, such as a spreadsheet, database, or CSV file. The goal of a tabular model is to predict one of the columns of the table based on the other columns.

Let's take a look at how we could implement this using fastai.tabular. Here we are trying to predict whether a person is a high-income earner based on their socioeconomic information.

from fastai.tabular.all import *

path = untar_data(URLs.ADULT_SAMPLE)

dls = TabularDataLoaders.from_csv(path/"adult.csv", path=path, y_names="salary",
    cat_names = ['workclass', 'education', 'marital-status', 'occupation',
                 'relationship', 'race'],
    cont_names = ['age', 'fnlwgt', 'education-num'],
    procs = [Categorify, FillMissing, Normalize])

learn = tabular_learner(dls, metrics=accuracy)
learn.fit_one_cycle(3)
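The three `procs` passed above are preprocessing steps. As a rough idea of what each one does, here is a pure-Python sketch. It is not fastai's implementation: the function names, the choice of the median as fill value, reserving code 0 for missing categories, and the sample columns are all assumptions made for illustration.

```python
from statistics import mean, median, pstdev

def categorify(values):
    """Map each distinct category to an integer code (0 is reserved for missing)."""
    vocab = sorted({v for v in values if v is not None})
    lookup = {v: i + 1 for i, v in enumerate(vocab)}
    return [lookup.get(v, 0) for v in values], vocab

def fill_missing(values):
    """Replace None with the median and record which entries were missing."""
    fill = median(v for v in values if v is not None)
    was_missing = [v is None for v in values]
    return [fill if v is None else v for v in values], was_missing

def normalize(values):
    """Rescale to zero mean and unit standard deviation."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]

# Made-up sample columns
workclass = ["Private", "State-gov", None, "Private"]
age = [39, None, 50, 28]

codes, vocab = categorify(workclass)
filled, was_missing = fill_missing(age)
scaled = normalize(filled)
```

Categorical columns end up as integer codes, while continuous columns end up gap-free and on a comparable scale.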

Four powerful concepts introduced by Arthur Samuel provided the building blocks for the process of training a neural network model:

- the idea of a weight assignment

- the fact that every weight assignment has an actual performance

- the need for an "automatic means" of testing that performance

- the need for an automatic process for improving the performance by updating the weight assignment
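The four concepts above can be sketched as a tiny training loop. This is only an illustration under stated assumptions: the model is a single weight `w` in `y = w * x`, performance is measured with mean squared error, and the update rule is plain gradient descent. Samuel did not prescribe these choices, and the data and function names are invented.

```python
# Toy data generated from y = 3 * x, so the ideal weight is 3.0
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]

def performance(w):
    """Automatic test of a weight assignment: mean squared error (lower is better)."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def improve(w, lr=0.01):
    """Automatic update: one gradient descent step on the mean squared error."""
    grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
    return w - lr * grad

w = 0.0                       # initial weight assignment
history = [performance(w)]    # performance of each assignment tried
for _ in range(100):
    w = improve(w)
    history.append(performance(w))
```

After the loop, `w` is close to 3.0 and the recorded error has dropped, which is the same cycle that `fit` runs at a much larger scale.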

Chapter 2 : From Model to Production

Gathering Data

We will be using the Bing Image Search API, which is part of Azure Cognitive Services.

First we need to get the API key from the Azure service we've set up and export it to our execution environment. After that, all we need is the search_images_bing function to search for images and fast.ai's download_images function to download them.

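import os
from fastbook import *   # search_images_bing comes from the fastbook package

# Assumption: the key was exported as the AZURE_SEARCH_KEY environment variable
key = os.environ['AZURE_SEARCH_KEY']

# Loop through the three types of bears and, for each one, search for and
# download the corresponding images (~150 per type)
bear_types = 'grizzly', 'black', 'teddy'
path = Path('bears')

if not path.exists():
    path.mkdir()
    for o in bear_types:
        dest = (path/o)
        dest.mkdir(exist_ok = True)
        results = search_images_bing(key, f'{o} bear')
        download_images(dest, urls=results.attrgot('contentUrl'))

# Delete any downloaded files that cannot be opened as images
fns = get_image_files(path)
failed = verify_images(fns)
failed.map(Path.unlink)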

From Data to DataLoaders

To turn our downloaded images into a dataset, we need to create DataLoaders. To do so, we have to tell fast.ai four things:

- what type of data we are working with

- how to get the list of items

- how to label those items

- how to create the validation set

Next we'll use the fast.ai data block API, which lets us fully customize the creation of our DataLoaders.

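bears = DataBlock(
    blocks=(ImageBlock, CategoryBlock),                # inputs are images, targets are categories
    get_items=get_image_files,                         # how to get the list of items
    splitter=RandomSplitter(valid_pct=0.2, seed=42),   # hold out 20% for validation
    get_y=parent_label,                                # label = name of the parent folder
    item_tfms=Resize(128))

# Swap the plain resize for a random crop of each image
bears = bears.new(item_tfms=RandomResizedCrop(128, min_scale=0.3))
dls = bears.dataloaders(path)
dls.train.show_batch(max_n=4, nrows=1, unique=True)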
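To show what the labelling and splitting steps amount to, here is a pure-Python sketch. It is not fastai's code: `parent_folder_label` and `random_split` are hypothetical stand-ins for `parent_label` and `RandomSplitter`, and the file names are invented.

```python
import random
from pathlib import Path

def parent_folder_label(fname):
    """Label an item with the name of the folder that contains it."""
    return Path(fname).parent.name

def random_split(items, valid_pct=0.2, seed=42):
    """Shuffle the item indices and hold out valid_pct of them for validation."""
    idxs = list(range(len(items)))
    random.Random(seed).shuffle(idxs)   # fixed seed gives the same split every run
    cut = int(valid_pct * len(items))
    return idxs[cut:], idxs[:cut]       # (training indices, validation indices)

# Hypothetical file names, for illustration only
files = [Path(f"bears/{kind}/{i}.jpg")
         for kind in ("grizzly", "black", "teddy") for i in range(5)]

train_idx, valid_idx = random_split(files)
labels = [parent_folder_label(f) for f in files]
```

Fixing the seed matters because it keeps the validation set identical between runs, so results stay comparable when the model changes.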

Data Augmentation

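Data augmentation creates random variations of the input images (for example by rotating, flipping, warping, or changing brightness and contrast) so that they look different without changing what they show. fast.ai's aug_transforms function provides a standard set of such augmentations: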

# mult=2 exaggerates the default augmentations so they are easy to see
bears = bears.new(item_tfms=Resize(128), batch_tfms=aug_transforms(mult=2))
dls = bears.dataloaders(path)
dls.train.show_batch(max_n=8, nrows=2, unique=True)

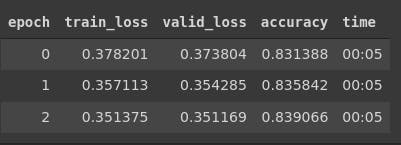
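As a rough illustration of a single augmentation, the sketch below applies a random horizontal flip to a tiny image stored as nested lists. It does not reflect how aug_transforms is implemented; `random_hflip`, its parameters, and the sample image are assumptions made for this example.

```python
import random

def random_hflip(img, p=0.5, rng=random):
    """Mirror the image left to right with probability p, else return it unchanged."""
    return [row[::-1] for row in img] if rng.random() < p else img

# A 2x3 "image" of pixel values
img = [[1, 2, 3],
       [4, 5, 6]]

rng = random.Random(0)   # seeded generator so the run is repeatable
batch = [random_hflip(img, rng=rng) for _ in range(4)]
```

Each pass over the data can therefore see a slightly different version of the same picture while the label stays the same.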

Now we can train our model and then use it to help clean our data:

# Train on 224px random crops with the default augmentations
bears = bears.new(item_tfms=RandomResizedCrop(224, min_scale=0.5), batch_tfms=aug_transforms())
dls = bears.dataloaders(path)

# cnn_learner is named vision_learner in more recent fastai releases
learn = cnn_learner(dls, resnet18, metrics=error_rate)
learn.fine_tune(4)

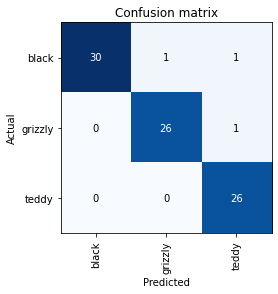

# Confusion matrix: rows are the actual classes, columns are the predicted ones
interp = ClassificationInterpretation.from_learner(learn)
interp.plot_confusion_matrix()

Notice that we misclassify only 3 images.
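For reference, a confusion matrix is just a table of counts. The sketch below builds one in pure Python from made-up labels and predictions; `confusion_matrix` here is a hypothetical helper, not the fast.ai method, and the numbers do not come from the model above.

```python
def confusion_matrix(targets, preds, classes):
    """Count items per (actual, predicted) pair; rows are actual, columns predicted."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(targets, preds):
        matrix[index[t]][index[p]] += 1
    return matrix

classes = ["black", "grizzly", "teddy"]
# Made-up labels and predictions, for illustration only
targets = ["black", "black", "grizzly", "grizzly", "teddy", "teddy"]
preds   = ["black", "grizzly", "grizzly", "grizzly", "teddy", "black"]

cm = confusion_matrix(targets, preds, classes)
# Everything off the diagonal is a misclassification
errors = sum(cm[i][j] for i in range(len(classes))
             for j in range(len(classes)) if i != j)
```

The diagonal holds the correct predictions, so the number of mistakes is the sum of all other cells.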

Now let's sort the images by loss and look at where the model does worst:

interp.plot_top_losses(5, nrows=1)
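To see why an image ends up among the top losses, here is a small sketch that assumes a cross-entropy loss, where the loss of one item is the negative log of the probability given to its true class. The image names and probabilities are invented.

```python
import math

def cross_entropy(probs, target_idx):
    """Loss for one item: negative log of the probability given to the true class."""
    return -math.log(probs[target_idx])

# Made-up predicted probabilities (black, grizzly, teddy) and true class indices
items = [
    ("img_a", [0.90, 0.05, 0.05], 0),   # confident and correct
    ("img_b", [0.10, 0.80, 0.10], 0),   # confident and wrong
    ("img_c", [0.40, 0.35, 0.25], 0),   # correct but unsure
]

losses = [(name, cross_entropy(p, t)) for name, p, t in items]
# Highest loss first, as in a "top losses" view
top_losses = sorted(losses, key=lambda pair: pair[1], reverse=True)
```

The loss is high when the model is confidently wrong and also when it is right but unsure, so the top of this list is a good place to look for mislabeled or low-quality images.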

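import shutil
from fastai.vision.widgets import *   # provides ImageClassifierCleaner

cleaner = ImageClassifierCleaner(learn)
cleaner

# After marking images for deletion or relabeling in the GUI, run these
# in a later cell to apply the changes to the actual files
for idx in cleaner.delete(): cleaner.fns[idx].unlink()
for idx, cat in cleaner.change(): shutil.move(str(cleaner.fns[idx]), path/cat)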

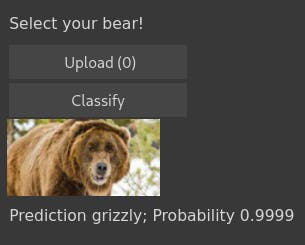

Turn our model into a Notebook App

If we were to build a real app, we would have to export our model (architecture + parameters):

learn.export()

In our case, we'll build the app in the same Jupyter notebook using IPython widgets.

import ipywidgets as widgets
from ipywidgets import VBox

# Load the exported model for inference
# (assumes export.pkl was saved to the current directory by learn.export())
learn_inf = load_learner('export.pkl')

# First we need an upload button
btn_upload = widgets.FileUpload()
# An Output widget displays the uploaded image
out_pl = widgets.Output()
# A Label displays the result message
lbl_pred = widgets.Label()
# The classify button
btn_run = widgets.Button(description='Classify')

# Event handler launched on click
def on_click_classify(change):
    img = PILImage.create(btn_upload.data[-1])
    btn_upload.data = []
    out_pl.clear_output()
    with out_pl: display(img.to_thumb(128,128))
    pred, pred_idx, probs = learn_inf.predict(img)
    lbl_pred.value = f'Prediction {pred}; Probability {probs[pred_idx]:.04f}'

# Register the event listener
btn_run.on_click(on_click_classify)

# Pack everything into a vertical box
VBox([widgets.Label('Select your bear!'), btn_upload, btn_run, out_pl, lbl_pred])

GitHub repo : https://github.com/Aymen311/bear_species_classifier