Deep Intuition about Image Classification: Building a Pet Breeds Classifier

Book notes: Deep Learning for Coders

Pet Breeds classification

This task is considerably more challenging than the cat/dog example, because the model has to tell many breeds apart instead of just two classes.

#download the dataset

from fastbook import *

path = untar_data(URLs.PETS)

pets = DataBlock(blocks=(ImageBlock, CategoryBlock), get_items=get_image_files,

splitter=RandomSplitter(seed=42),

get_y=using_attr(RegexLabeller(r'(.+)_\d+.jpg$'), 'name'),

item_tfms = Resize(460),

batch_tfms=aug_transforms(size=224, min_scale=0.75))

dls= pets.dataloaders(path/'images')
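The labelling pattern can be checked on its own before building the DataBlock. The sketch below applies the same regular expression with Python's re module to two file names in the style the dataset uses. The helper name breed_from_filename and the sample file names are assumptions made for illustration; they are not part of fastai.

```python
import re

# Same pattern as the one passed to RegexLabeller: capture everything
# that comes before the final "_<digits>.jpg".
PATTERN = re.compile(r'(.+)_\d+.jpg$')

def breed_from_filename(name):
    """Hypothetical helper: return the breed part of an image file name."""
    match = PATTERN.search(name)
    if match is None:
        raise ValueError(f"file name does not match the pattern: {name}")
    return match.group(1)

# Illustrative file names, chosen to show single-word and multi-word breeds.
for fname in ["great_pyrenees_173.jpg", "Abyssinian_1.jpg"]:
    print(fname, "->", breed_from_filename(fname))
```

Because the first group is greedy, a breed name that itself contains underscores is kept whole and only the trailing number is stripped.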

#fine tune the model

learn = cnn_learner(dls, resnet34, metrics=error_rate)

learn.fine_tune(2)

Cross entropy loss

Cross-entropy loss is the loss that fastai selects by default for an image classification task like this one. It works with more than two categories, and it leads to faster and more reliable training.

Softmax turns the activations into probabilities, but using those probabilities directly as a loss has a weakness: they are confined to the range 0 to 1, so the model barely distinguishes a prediction of 0.99 from one of 0.999. The two numbers are close, yet 0.999 is 10 times more confident than 0.99, because the remaining chance of being wrong drops from 0.01 to 0.001. Taking the logarithm stretches the range from (0, 1) to (-infinity, 0), which makes such differences visible to the loss.

One way to do this is to take the log of the softmax output and then apply nll_loss, which picks the log probability of the correct class for each item, negates it, and averages the result. Note that the PyTorch function, despite its name (negative log likelihood), does not take the log itself: it expects log probabilities as input, for example the output of log_softmax.

This combination is the cross-entropy loss: log_softmax followed by nll_loss. In PyTorch it is available as nn.CrossEntropyLoss.
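To make the effect of the logarithm concrete, here is a small sketch in plain Python (no PyTorch needed). The probabilities are made-up values for the correct class, chosen only to show how the negative log behaves near 1.

```python
import math

# Probabilities a model might assign to the correct class (illustrative values).
probs = [0.5, 0.9, 0.99, 0.999]

# Negative log of each probability: close to 0 for confident correct
# predictions, and growing without bound as the probability approaches 0.
neg_logs = [-math.log(p) for p in probs]

for p, nl in zip(probs, neg_logs):
    print(f"p={p:<6} -log(p)={nl:.5f}")

# How much larger the penalty for 0.99 is than the penalty for 0.999.
ratio = neg_logs[2] / neg_logs[3]
print(f"penalty ratio (0.99 vs 0.999): {ratio:.2f}")
```

The two probabilities differ by less than 0.01, but after the log their penalties differ by roughly a factor of ten.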

#by using the class constructor

loss_func = nn.CrossEntropyLoss()

#or by using the plain function available in F

F.cross_entropy(acts, targ)

People tend to use the class version. By default it averages the loss over all items; passing reduction='none' disables the averaging and returns one loss per item.

nn.CrossEntropyLoss(reduction='none')(acts, targ)

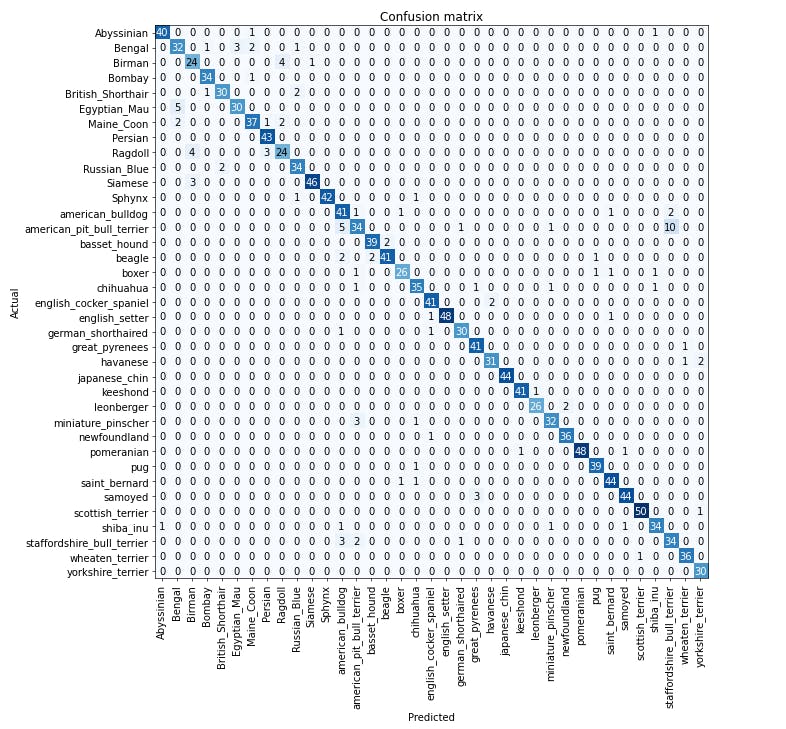
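The combination described above (log of the softmax, followed by the negative log likelihood) can be sketched in plain Python to see what each step does. This is a from-scratch illustration and not the PyTorch implementation; the function names mirror the PyTorch ones for readability, and the activations and targets are made up.

```python
import math

def log_softmax(acts):
    """Log of the softmax of one row of activations (numerically stable)."""
    m = max(acts)
    log_sum = m + math.log(sum(math.exp(a - m) for a in acts))
    return [a - log_sum for a in acts]

def nll_loss(log_probs, targets, reduction="mean"):
    """Negative log probability of the target class for each row."""
    losses = [-row[t] for row, t in zip(log_probs, targets)]
    if reduction == "none":
        return losses                    # one loss per item
    return sum(losses) / len(losses)     # average over the batch

def cross_entropy(acts, targets, reduction="mean"):
    """log_softmax followed by nll_loss."""
    return nll_loss([log_softmax(row) for row in acts], targets, reduction)

# Made-up activations for 3 items and 3 classes, with their target indices.
acts = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0], [1.0, 1.0, 1.0]]
targ = [0, 2, 1]

per_item = cross_entropy(acts, targ, reduction="none")
mean_loss = cross_entropy(acts, targ)
print(per_item)
print(mean_loss)
```

The third item has identical activations for all three classes, so its loss is log(3), the penalty for a uniform guess.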

Model Interpretation

The loss is a number the optimizer can work with, but it tells a human very little, so it is hard to judge a model from it alone. This is why we also look at metrics. For instance, we can plot the confusion matrix to see which classes get misclassified.

interp = ClassificationInterpretation.from_learner(learn)

interp.plot_confusion_matrix(figsize=(12,12), dpi=60)

With this many classes the matrix is hard to read, and the misclassified cells are difficult to spot. Instead, we can list the pairs of classes with the most incorrect predictions.

interp.most_confused(min_val=5)
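The idea behind this listing can be sketched in plain Python: count every (actual, predicted) pair where the two differ, keep the pairs seen at least min_val times, and sort by count. The function name most_confused_sketch and the labels below are assumptions for illustration; this is not fastai's implementation.

```python
from collections import Counter

def most_confused_sketch(actual, predicted, min_val=1):
    """Return (actual, predicted, count) for wrong predictions, most frequent first."""
    counts = Counter((a, p) for a, p in zip(actual, predicted) if a != p)
    pairs = [(a, p, n) for (a, p), n in counts.items() if n >= min_val]
    return sorted(pairs, key=lambda t: t[2], reverse=True)

# Made-up labels and predictions for seven validation images.
actual    = ["beagle", "beagle", "beagle", "pug", "pug", "ragdoll", "ragdoll"]
predicted = ["basset", "basset", "beagle", "pug", "beagle", "birman", "birman"]

confused = most_confused_sketch(actual, predicted, min_val=2)
print(confused)
```

Raising min_val filters out one-off mistakes, so only the systematic confusions remain.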

Since we are not pet breed experts, it is hard for us to tell whether these mistakes reflect breeds that are genuinely difficult to tell apart.

Improving Our Model

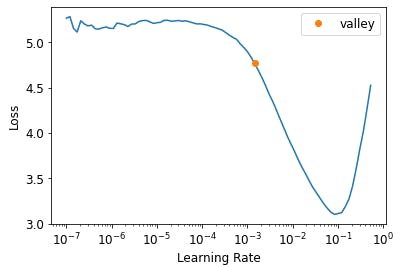

Choosing the right learning rate

Choosing the right learning rate can be tricky. If the value is too large, the optimizer overshoots and the model may fail to converge to a minimum, or even diverge. If it is too small, training takes many epochs, and every extra pass over the data is another chance for the model to memorize it, which can lead to overfitting.
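A toy example shows both failure modes. The sketch below runs plain gradient descent on the one-parameter loss w**2, which is an assumption chosen for simplicity and has nothing to do with the pet model itself; only the learning rate changes between runs.

```python
def gradient_descent(lr, steps=20, w=1.0):
    """Minimize the toy loss w**2 (gradient 2*w) with a fixed learning rate."""
    for _ in range(steps):
        w -= lr * 2 * w
    return w

w_small = gradient_descent(lr=1e-4)  # too small: w barely moves from 1.0
w_good  = gradient_descent(lr=0.1)   # reasonable: w approaches the minimum at 0
w_large = gradient_descent(lr=1.1)   # too large: each step overshoots further

print(w_small, w_good, w_large)
```

With the same number of steps, the small rate has made almost no progress, the moderate rate is close to the minimum, and the large rate has moved away from it.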

Learning rate finder

This algorithm was introduced in 2015 by Leslie Smith. The idea is to start with a very small learning rate, use it for one mini-batch and record the loss, then increase the learning rate by a fixed percentage (for example, doubling it each time) and repeat with the next mini-batch. The process continues until the loss gets worse instead of better. From the resulting curve we can choose a learning rate by either:

- taking one order of magnitude less than the learning rate where the minimum loss was achieved (the minimum divided by 10).

- taking the last point where the loss was clearly decreasing.
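The procedure can be sketched on a toy problem. The code below is a simplified illustration and not fastai's lr_find: it uses the one-parameter loss w**2 in place of a network, doubles the learning rate after every step, stops once the loss exceeds four times the best loss seen so far (an assumed stopping rule), and applies the first heuristic from the list. Real loss curves are noisy and are usually smoothed before a value is picked.

```python
def lr_find_sketch(loss_fn, grad_fn, w, start_lr=1e-4, factor=2.0, max_steps=100):
    """Increase the learning rate each step and record the loss until it blows up."""
    lr, best = start_lr, float("inf")
    lrs, losses = [], []
    for _ in range(max_steps):
        loss = loss_fn(w)              # loss before stepping with this lr
        lrs.append(lr)
        losses.append(loss)
        if loss > 4 * best:            # assumed stopping rule: loss got much worse
            break
        best = min(best, loss)
        w -= lr * grad_fn(w)           # one training step at the current lr
        lr *= factor                   # raise the lr for the next step
    lr_at_min = lrs[losses.index(min(losses))]
    return lrs, losses, lr_at_min / 10  # one order of magnitude below the minimum

lrs, losses, suggestion = lr_find_sketch(lambda w: w * w, lambda w: 2 * w, w=1.0)
print(f"tried {len(lrs)} learning rates, suggestion: {suggestion:.4f}")
```

The search stops shortly after the loss starts climbing, and the suggested value sits safely below the point where training becomes unstable.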

learn = cnn_learner(dls, resnet34, metrics=error_rate)

lr_min,lr_steep = learn.lr_find()

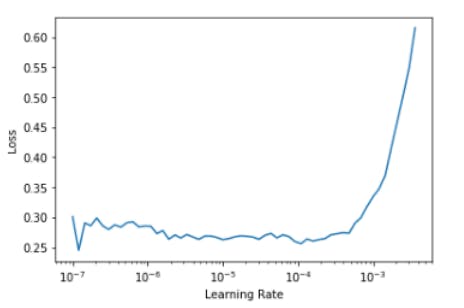

Unfreezing and Transfer Learning

The challenge in transfer learning is that the final layers of the pre-trained model were designed for its original task, so they do not fit ours. We discard the final layer of the pre-trained model and replace it with new, randomly initialized layers sized for our classes. We then freeze the pre-trained weights and train only the new layers for one epoch, and finally unfreeze all the layers and train the whole model for the requested number of epochs.

Note that fastai automatically freezes the pre-trained layers when we create a model from a pre-trained network.
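The freeze-then-unfreeze schedule can be illustrated without any deep learning library. In the sketch below, ParamGroup, sgd_step, the weights, and the constant gradients are all hypothetical stand-ins: "body" plays the role of the pre-trained layers and "head" the role of the new final layers.

```python
from dataclasses import dataclass

@dataclass
class ParamGroup:
    name: str
    weights: list
    trainable: bool = True

def sgd_step(groups, grads, lr):
    """Apply one SGD update, skipping any group that is frozen."""
    for g in groups:
        if g.trainable:
            g.weights = [w - lr * d for w, d in zip(g.weights, grads[g.name])]

body = ParamGroup("body", [0.5, -0.2])   # stands in for pre-trained weights
head = ParamGroup("head", [0.0])         # stands in for the new final layer
model = [body, head]
grads = {"body": [1.0, 1.0], "head": [1.0]}  # made-up constant gradients

# Stage 1: freeze the body and train only the head.
body.trainable = False
sgd_step(model, grads, lr=0.1)
body_after_stage1 = list(body.weights)
head_after_stage1 = list(head.weights)

# Stage 2: unfreeze and train everything, here with a smaller learning rate.
body.trainable = True
sgd_step(model, grads, lr=0.01)

print(body_after_stage1, head_after_stage1, body.weights, head.weights)
```

During the first stage only the head moves, so the pre-trained weights are protected while the new layers are still random; once everything is unfrozen, both groups are updated.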

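learn = cnn_learner(dls, resnet34, metrics=error_rate)

#train the new layers while the pre-trained layers are still frozen

learn.fit_one_cycle(3, 3e-3)

#unfreeze all the layers, then look for a new learning rate

learn.unfreeze()

learn.lr_find()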

OUTPUT: (1.0964782268274575e-05, 1.5848931980144698e-06)

Here we can pick a learning rate well before the point where the loss starts to increase, for example 1e-5.